The online Tesla fan, owner and investor ecosystem has been roiled by controversy over the last week, as the latest changes to pricing and options appear to punish the early adopters of Tesla's "Enhanced Autopilot" and "Full Self-Driving" options. Conflict between the betrayed early adopters and the defenders of Tesla's decision has been so intense that the forums that cater to Tesla fandom have had to heavily moderate and consolidate the heated discussions, address attacks on the communities themselves, and issue calls for de-escalation and reconciliation. Even Electrek, an outlet that has elevated shilling for Tesla to a way of life, came out against the latest price cut, calling it a "bait-and-switch" that "does a disservice to early buyers" and documenting a full-blown protest by Chinese Tesla customers.

Having found myself on the receiving end of the Tesla fan community's wrath before, I'd be lying if I said I wasn't a little bemused to see its fundamentalist intolerance of criticism, paranoia and righteous fury turned on itself for a change. The controversy itself is mildly interesting in that it inflames the old divisions between owners and investors, and exposes the tension between Tesla's need for profits and its world-changing mission, but it's not nearly as interesting as, well, almost everything else about Autopilot and "Full Self-Driving." For a group of people who are quick to frame their support of Tesla in terms of its altruistic mission, Tesla fans seem a lot more worked up about an issue that hits their pocketbooks than any of the literal life-and-death issues around Tesla's automated driving program.

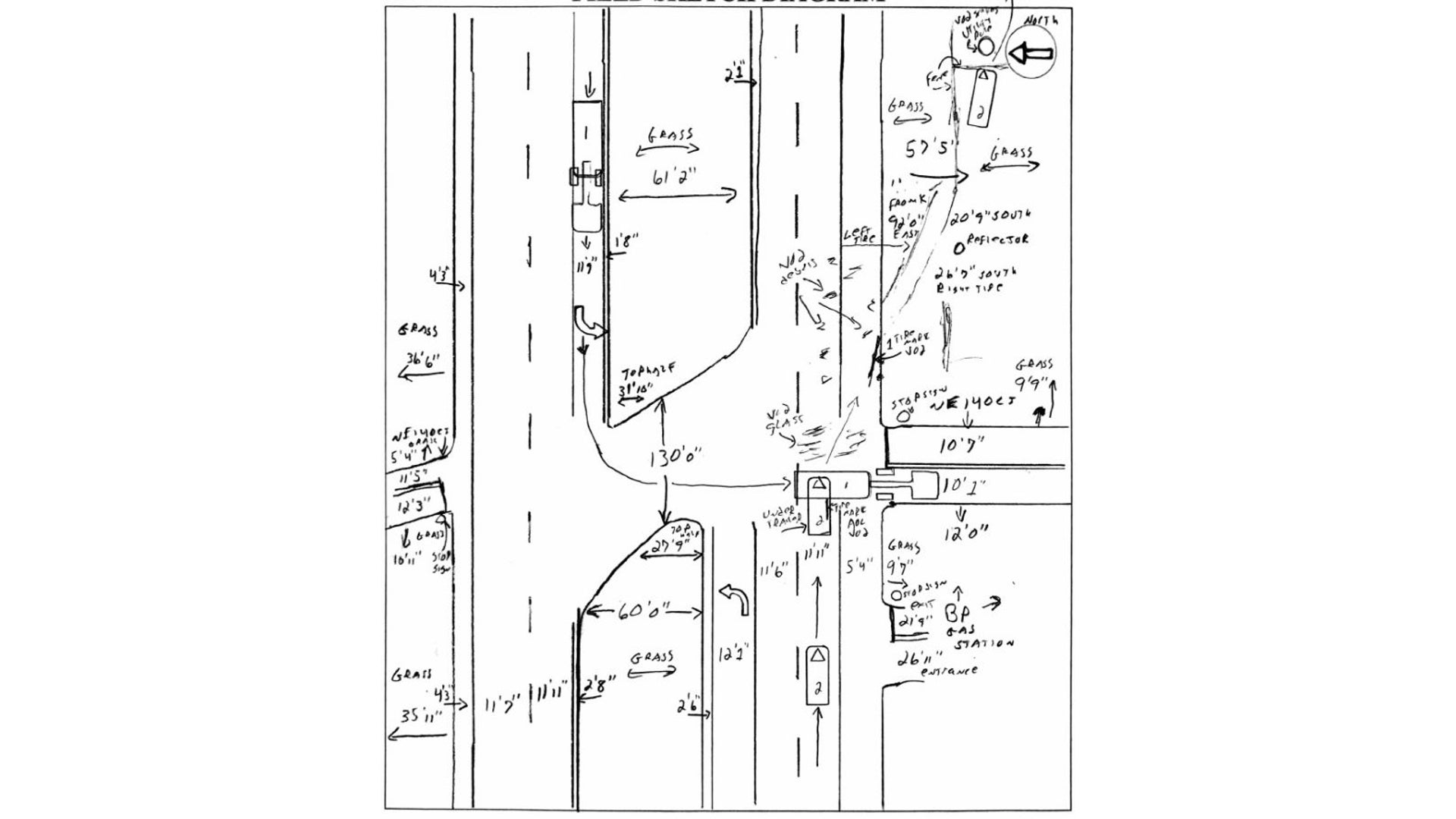

The fact that automated driving systems are a matter of life and death was punctuated again this week by the death of a Tesla driver named Jeremy Beren Banner, in a crash that appears to be terrifyingly similar to the one that killed Joshua Brown in May 2016. Like the Brown crash, the Banner incident involved a Tesla (a Model 3 this time, where Brown drove a Model S) on a divided road with occasional cross-traffic being driven directly into the side of a crossing semi truck, shearing off the car's roof, and then continuing to drive for some distance after the collision. The National Highway Traffic Safety Administration and the National Transportation Safety Board are investigating the crash, including whether the Autopilot system contributed to the fatality, as the NTSB found that it did in the Brown crash.

The striking similarities between the recent crash that killed Banner and the 2016 Brown crash mean this latest investigation could have enormous consequences for the future of Tesla's Autopilot feature. In its investigation of the Brown case, the NTSB found that two aspects of Autopilot's design had contributed to the fatal crash: a lack of "operational design domain" limits, essentially the ability to activate Autopilot even on roads where Tesla says it isn't safe, and the lack of sufficient driver attention monitoring. These findings ultimately went nowhere, as the NTSB has no regulatory power and NHTSA had issued a report concluding that Autopilot reduced crashes by 40%, instantly putting public concerns about Autopilot on ice. With NHTSA's report only recently exposed as a whitewash based on bad data and worse analysis, another Autopilot-related fatality under similar circumstances could put enormous pressure on the regulator to act. But then, so too could an ongoing NTSB investigation into the death of Walter Huang, yet another instance (along with Gao Yaning in China) of a Tesla on Autopilot driving into a stationary object at high speed.

Or, to be as precise as possible, the Teslas were in fact being driven into stationary objects at high speed by Huang and Brown, who Tesla says were responsible at all times for their own safety. This legal disclaimer has been Tesla's first and last line of defense against criticisms of Autopilot, supplemented only by consistently misleading and easily debunked statistical comparisons intended to prove that Autopilot is somehow also safer than human drivers. How Autopilot can be both intrinsically safer than any human driver and simultaneously utterly dependent on the human driver to keep the vehicle safe is a question Elon Musk recently had to dance around when TechCrunch's Kirsten Korosec asked whether it might be "a problem that you're calling this full self-driving capability when you're still going to require the driver to take control or be paying attention" [disclosure: Korosec is my cohost at The Autonocast and a friend].

Musk's full reply (via transcript provided by Paul Huettner on Twitter) rhumbas right past the contradiction staring him in the face and pirouettes into more familiar territory:

Musk's aspiration to someday transcend this grotesquely absurd contradiction at the heart of "Full Self-Driving" is... aspirational, but it doesn't exactly answer Korosec's question. If anything, it only recalls Musk's long record of wildly optimistic predictions about "Full Self-Driving," including predictions that it would arrive at no fewer than six different points in 2017, 2018 and 2019 before he settled on the most recent target of late 2020. Even if you believe he finally got it right this time, "Full Self-Driving" still won't actually be what it says on the tin for nearly two more years. Even then it won't be the SAE Level 5 system he said "Full Self-Driving" would be when he introduced the option in 2016.

The reason Musk can only dance around Korosec's question was hammered home when a former Autopilot team member turned my question back on me: what would you say in that position? Admitting that Autopilot presented certain risks without driver monitoring and hard operational domain limits could give rise to massive legal liabilities of all kinds. On top of that, a complete fleet retrofit including integrated interior driver-monitoring hardware would be ruinously expensive. And that's before any reputational impact for Musk, any demand impact on Tesla, or any financial impact from the loss of a gross margin booster.

The closest Musk has strayed towards this impossible choice was in Tesla's earnings call for the first quarter of 2018, when he admitted:

With every new video of someone apparently napping in a Tesla on a California freeway that floats across my Twitter feed, Musk's admission seems more and more obvious. It's not a lack of understanding; it's thinking you know more than you do. To Musk these must seem to be unconnected phenomena, but I can't tell the difference. Does the distinction explain why exactly someone falls asleep behind the wheel or otherwise fails to see the large stationary object they are rapidly driving toward? If not, the good news is that gaze-tracking technology actually does work (contra Musk's bizarre assertion that it was rejected because it's ineffective and not because it cost too much), and The Autance's own Alex Roy has already eloquently explained why its omission wasn't worth whatever cost it saved.

Musk said on the last quarterly call that autonomous drive technology's potential to save lives made it part of the "goodness" of Tesla, but it increasingly looks like Tesla's ugliest side. Cadillac's SuperCruise has the hard operational domain limits and driver monitoring that the NTSB says could have prevented Joshua Brown from driving into a truck at high speed, and it doesn't have a single crash on record, let alone a fatality. If NTSB finds more evidence that these systems could have prevented the deaths of Walter Huang and even Jeremy Banner, Musk's continued insistence that actual, real "Full Self-Driving" with no need for human oversight is actually coming soon, really, won't matter. NHTSA's own enforcement guidance, published after its Josh Brown whitewash report, makes it clear:

This enforcement guidance describes precisely the problem identified in the Josh Brown crash. If the NTSB's Walter Huang and Jeremy Banner investigations come to similar findings, NHTSA will have little choice but to act according to its own guidance. If that happens, anyone who spent money on one of Tesla's automated driving systems, whether Autopilot or "Full Self-Driving," is likely to regret the decision. Of course, even if you do "overpay" for Autopilot or "Full Self-Driving" only for NHTSA to yank it off the streets, that's still better than slowly growing comfortable with the system until you suddenly realize that you are driving at high speed toward a stationary object and it's too late to do anything about it.