Tesla has always approached autonomous drive technology differently than anyone else working toward self-driving vehicles, and that unique approach was on full display during its lengthy presentation to investors. Starting with its aspiration to build Level 5 autonomous vehicles capable of operating in any and all conditions, continuing through its dismissal of lidar and high definition maps as "crutches," its rejection of sensor redundancy and its prediction that unsupervised autonomy will be ready in one short year, Tesla is in its own world when it comes to this technology.

Given that perceptions of Tesla are extremely polarized, this is generally interpreted one of two ways. People either believe that Tesla is so far ahead of every other autonomous drive developer that it can't help but buck the consensus in the space, or they believe that Tesla is pursuing an impossible path toward self-driving vehicles and is trying to bluff its way to a perception of technological leadership. I had hoped that today's comprehensive presentation might open up space for a more nuanced middle ground in this debate, but if anything the divided perspectives grew even further apart over the course of the day.

Though Tesla didn't provide meaningful answers to the questions that skeptics have been raising about its autonomous drive program, it did provide a greater level of detail about the hardware and software it says will power its robotaxis. The event kicked off with a presentation about its new "Full Self-Driving Computer," a dedicated neural net accelerator designed by a Tesla team led by former Apple engineer Pete Bannon, and included presentations on its neural net training techniques and software integration, as well as some early projections about the economics of its "Tesla Network" robotaxi system. Alternating between dense and nerdy details at times, and trite and simplistic gloss at others, the information provided was peppered with CEO Elon Musk's trademark confidence as well as his talent for overlooking huge questions about Tesla's approach to this complex technology.

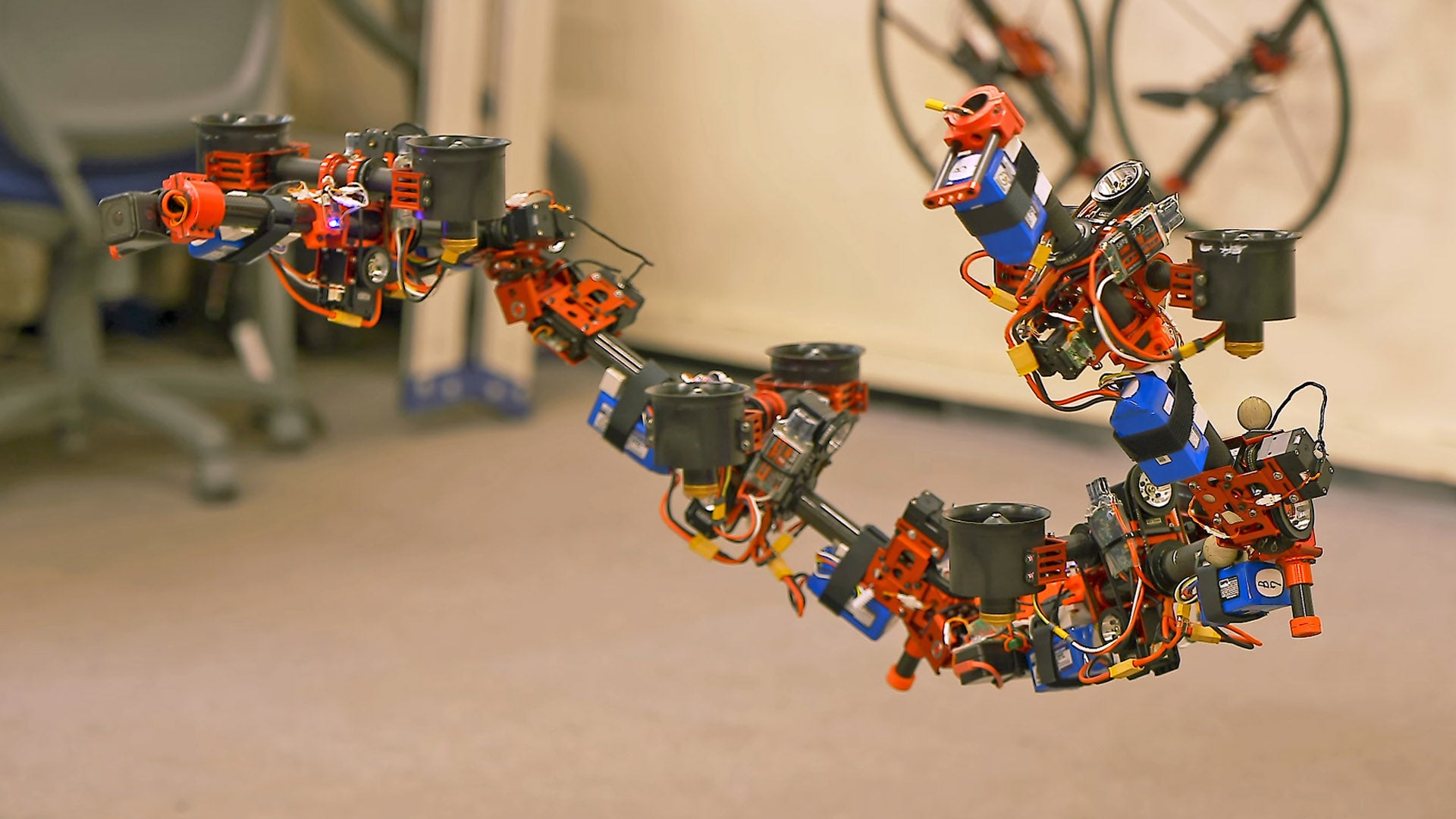

Ever since Tesla announced that all its vehicles had hardware capable of full self-driving back in October of 2016, much of the criticism directed at the company's hardware focused on its relatively meager sensor suite compared to what full-stack Level 4 AV developers use. Tesla continues to insist that its single forward radar and surround cameras are sufficient for its vision-based autonomous drive system, despite the fact that companies like Waymo use four radar units for 360 degree coverage as well as short, medium and long-range lidar units on top of surround cameras. Tesla has admitted that the NVIDIA Drive PX/2 processors it equipped starting in 2016 are insufficient for full self-driving, and it revealed fresh details about its new processor which it now says is the only hardware upgrade needed to achieve Level 5 autonomy.

Musk calls the new custom processor "objectively the best chip in the world," claiming that the redundant Samsung-built 14 nanometer processor makes the new board capable of 144 TOPS (trillion operations per second) using less than 100 Watts of total power draw. It's also 20% cheaper than the NVIDIA units currently in use, according to Tesla, although for sheer power the NVIDIA Drive AGX Pegasus beats it out at 320 TOPS (albeit at 500 Watts of power consumption). So this new hardware may be among the best in the world for this application on a performance per watt and performance per dollar basis, but it only makes a difference if Tesla's only hardware bottleneck is on the processor side. Meanwhile, Musk revealed that Tesla is already working on a next-generation version of its processor that he says is two years out and will offer three times the capability.
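To put the efficiency comparison in concrete terms, here is a rough back-of-the-envelope calculation using only the figures quoted above. Treat it as a sketch: the 100 Watt figure for Tesla's board is an upper bound ("less than 100 Watts"), so its real efficiency would be somewhat higher, and the dictionary and function names are purely illustrative.

```python
# Figures as quoted in the article. Tesla's power draw is stated as
# "less than 100 Watts", so 100 is an upper bound (assumption for the math).
chips = {
    "Tesla FSD Computer": {"tops": 144, "watts": 100},
    "NVIDIA Drive AGX Pegasus": {"tops": 320, "watts": 500},
}


def tops_per_watt(tops, watts):
    """Return compute efficiency in trillions of operations per second per watt."""
    return tops / watts


for name, spec in chips.items():
    efficiency = tops_per_watt(spec["tops"], spec["watts"])
    print(f"{name}: {efficiency:.2f} TOPS/W")
```

On these numbers Tesla's board comes out at no less than 1.44 TOPS per watt against 0.64 for the Pegasus, which is the sense in which it can lead on efficiency while trailing on raw throughput.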

Tesla's Andrej Karpathy gave a presentation on Tesla's deep learning approach to autonomous drive, talking up the huge amounts of data the company supposedly gets from its fleet, and explaining how that data is used to refine its neural nets. He admitted that labeling the sheer volume of data Tesla gets is labor-intensive and expensive, but said that it is doing more automated labeling with things like the "cut-in" neural net intended to help Teslas negotiate vehicles cutting into their lane. By labeling cut-ins, they train the system to anticipate them, run that system in "shadow mode" for validation, refine it further and eventually deploy the system. Musk and Karpathy present this approach as an alternative to simulation training, which they say can't provide the kinds of data found in the real world, but this argument seems to ignore the approach that Waymo, NVIDIA and others take, pulling scenarios from real-world driving and replaying them in simulation with myriad "fuzzed" permutations of each decision.
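For readers unfamiliar with the idea, "fuzzing" a recorded scenario can be sketched in a few lines. This is a toy illustration only, not a description of any company's actual tooling: the `CutInScenario` fields, the `fuzz` function and the perturbation values are all hypothetical, chosen to show how one real-world cut-in could be expanded into many simulated variants.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class CutInScenario:
    """Hypothetical parameters extracted from one recorded cut-in event."""
    ego_speed_mps: float      # speed of the vehicle under test
    cut_in_gap_m: float       # gap ahead when the other car moves over
    cut_in_speed_mps: float   # speed of the car cutting in


def fuzz(base, speed_deltas, gap_deltas):
    """Yield variants of a recorded scenario with perturbed parameters."""
    for speed_delta, gap_delta in product(speed_deltas, gap_deltas):
        gap = base.cut_in_gap_m + gap_delta
        if gap <= 0:
            continue  # skip physically meaningless variants
        yield replace(
            base,
            cut_in_speed_mps=base.cut_in_speed_mps + speed_delta,
            cut_in_gap_m=gap,
        )


# One recorded event (made-up numbers) expanded into a grid of permutations.
recorded = CutInScenario(ego_speed_mps=30.0, cut_in_gap_m=12.0, cut_in_speed_mps=27.0)
variants = list(fuzz(recorded, speed_deltas=(-3.0, 0.0, 3.0), gap_deltas=(-6.0, 0.0, 6.0)))
print(f"{len(variants)} simulated variants from one recorded cut-in")
```

Each variant would then be replayed in simulation against the driving software, so a single real-world event yields many test cases, including harder ones that were never actually encountered on the road.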

Karpathy also described the self-supervision approach to machine learning that Tesla uses to turn camera images into something approximating the 3D point clouds generated by lidar, which the company says makes expensive sensors irrelevant. Both Musk and Karpathy repeatedly dismissed the need for lidar and 360 degree radar, arguing that humans drive using only their eyes and that even radar is only necessary in front of the vehicle. This gets to one of the most important facts of autonomous vehicle development: creating basic functionality is the relatively easy part of making a vehicle drive itself; the hard part is validating safety to the necessary extent. Though it is possible to build out that functionality using vision alone, sensor redundancy becomes more and more important as validation goes on and tougher scenarios are encountered. Ensuring that the system can be confident of what it is seeing helps make for good prediction and path planning, and each extra sensor you have available to validate perception increases that confidence level.

Musk's prediction that "Full Self-Driving" will be "feature complete" by the end of this year, and that it will be validated to the point of not requiring human supervision by the second quarter of next year, suggests that Tesla could be wildly underestimating the amount of validation that this will require and the challenges that validation could present. Yes, Tesla will have access to lots of data with which to validate the system. But unlike professional testers, Tesla's customers won't be identifying and annotating every challenging situation they come across, meaning the company will have to sift through a haystack of data looking for the needles it needs to worry about. Without multiple diverse and redundant sensors covering 360 degrees around each car, the data it receives about particularly tricky situations may not even be sufficient to identify the problems or teach a solution. Meanwhile, those customers will have to battle the natural tendency toward complacency and remain alert for situations where the minimal sensor suite isn't able to fully understand a complex driving scene, since they will be the system's safety net.

If, during this validation process, the data shows that the system is unable to distinguish complex situations using cameras alone (and radar for the area directly in front of the car) and has learned erroneously from its incomplete picture of the world, progress could be derailed. Due to the lack of visibility into neural net functions, which are more or less a "black box," any significant problems that emerge from validation could require Tesla to roll back its progress and re-train its perception system. This is why it takes millions of miles of validation to even have a basic level of confidence in a given software build, and why AV developers opt for "sensor overkill" despite the high cost of lidar and radar. Since you're never quite sure what inferences and correlations a neural net is making, ensuring that its input is as precise and granular as possible can prevent massive setbacks when validation uncovers problems that crept into the system without anyone ever noticing.

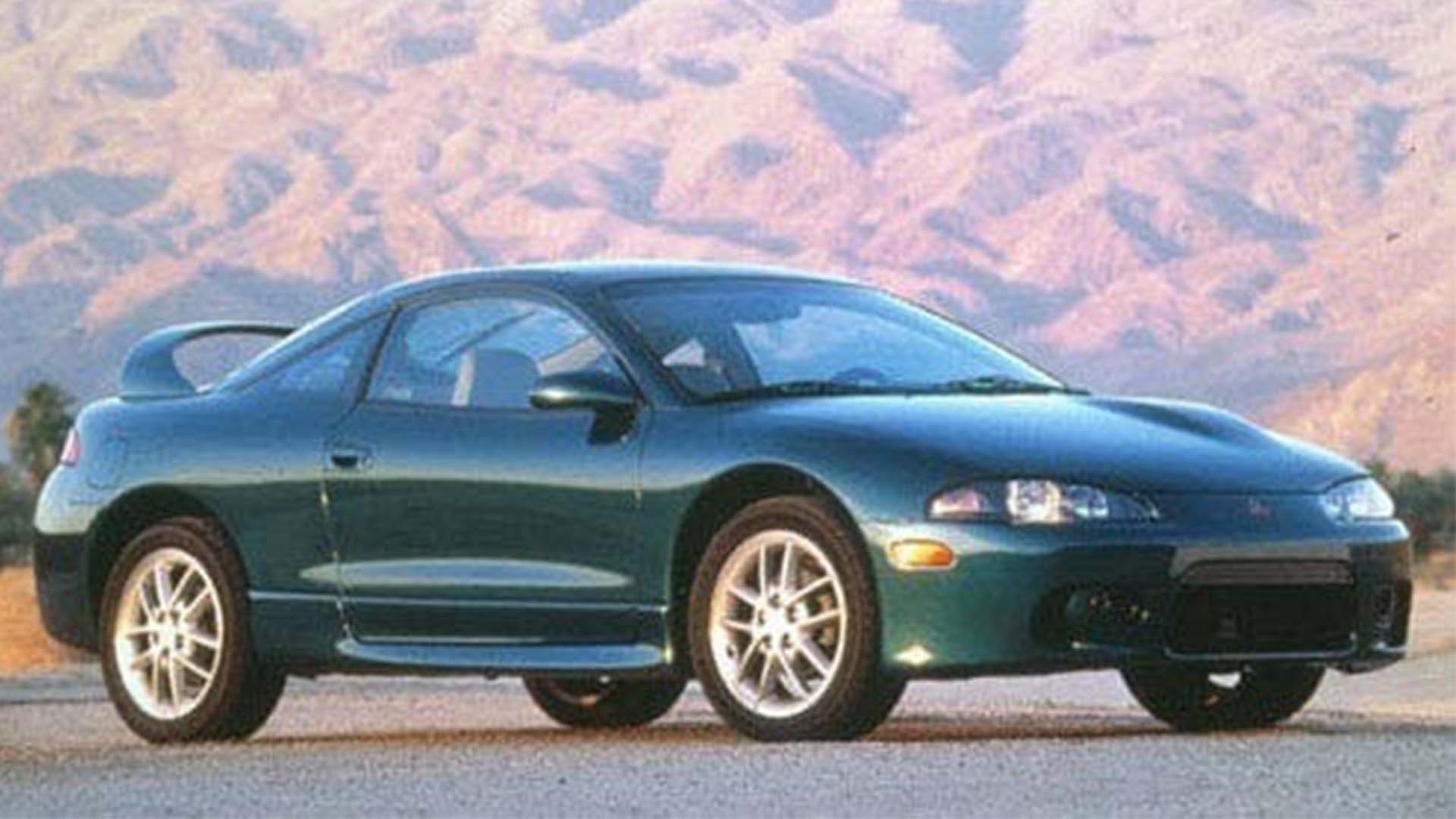

This did not prevent Musk from running wild with predictions about what Teslas will be able to accomplish one year from now, when he predicts the system will be good enough to drive with no human oversight. Using a "Tesla Network" ride-hailing app, customers will be able to put their Teslas to work while idle, earning as much as $30,000 per vehicle per year according to the company. Predictions of a $0.18 cost per mile, the ability to earn $0.65 per mile in gross profit, and a million miles of reliable performance are all wildly speculative even without taking into consideration the challenges of developing autonomous vehicle technology itself. The vision of a million Teslas operating 24 hours per day, with Tesla getting 20-30% of their revenues, is the kind of vision that takes investors' focus off the challenging and low-margin business of making and selling cars... while potentially reinvigorating flagging consumer demand for the company's vehicles.
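It is worth checking what those headline numbers imply when taken together. The sketch below assumes, and this is my reading rather than something Tesla spelled out here, that the $30,000 annual figure is gross profit earned at the quoted $0.65 per mile. The variable and function names are illustrative.

```python
# Figures quoted above. ASSUMPTION: the $30,000 per year is gross profit
# earned at the stated $0.65 of gross profit per mile.
ANNUAL_GROSS_PROFIT_USD = 30_000
GROSS_PROFIT_PER_MILE_USD = 0.65


def implied_paid_miles(annual_profit, profit_per_mile):
    """Return the paid miles per year needed to reach the annual profit."""
    return annual_profit / profit_per_mile


miles_per_year = implied_paid_miles(ANNUAL_GROSS_PROFIT_USD, GROSS_PROFIT_PER_MILE_USD)
miles_per_day = miles_per_year / 365

print(f"{miles_per_year:,.0f} paid miles per year, about {miles_per_day:.0f} per day")
```

Under that assumption, each car would need to carry paying passengers for roughly 46,000 miles a year, or about 126 miles every single day, before counting any empty miles driven between fares.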

That, after all, is what Tesla's autonomous drive technology efforts have always been about: pumping up the company's stock price while boosting sales of its cars. Tesla's entire approach to the technology is defined by its need to keep its traditional car business humming along: it was stuck with its minimal sensor suite for fear of making its cars too expensive, and the idea of a "Tesla Network" is a bizarre amalgamation of a shared mobility future and Tesla's current traditional OEM business model. Since Tesla built its car business before it realized the potential for autonomous drive technology to transform it, it has had to twist the possibilities this new technology opens to fit the business it already built. Ironically for a so-called Silicon Valley "disruptor," Tesla's approach to autonomy is a textbook example of "the innovator's dilemma."

Rather than building dedicated robotaxis for fleets, Tesla has turned the robotaxi vision into a pitch to private owners: buy our premium sports sedan today, and tomorrow it will earn you money. Because of this distributed approach to fleet ownership, Tesla can't take the geofenced Level 4 approach that others have, forcing it to take on far more challenges by promising that its cars will drive themselves in any and all conditions. At the same time, all this has to happen with a sensor suite that was designed to keep costs at a level that keeps the vehicle within reach of private customers. It also requires Tesla to promise that full self-driving capability will be available soon, so that people will purchase cars today in the hope that those cars will earn their keep as robotaxis before their owners want or need to buy a new one.

The problem is that these decisions, driven by the need to protect and boost Tesla's current business, are not the right ones for the eventual robotaxi business. Purpose-built robotaxis will be more robust and more easily serviced than Tesla's premium sports sedans, they can use more expensive and capable sensor suites since they will be bought by fleet companies rather than individuals, and they can be developed around specific geographic areas, thus reducing the number of potential edge cases and challenges. Tesla is trying to portray these characteristics as the product of poor approaches or technological shortcomings by other AV developers, but they are in fact the products of a more focused, ground-up development approach with higher safety standards. Tesla's cheaper yet more ambitious vehicles are the product of its existing business model, not some unique technological advantage that nobody else in the space has figured out yet.

Ultimately, Musk has proven that his fans will consistently give him the benefit of the doubt, and the early reactions to today's presentation suggest that many of them still believe that Tesla's approach must be intrinsically superior. But outside of that specific group, attitudes toward autonomous drive technology have become a lot more sober over the last year or so in the wake of the Uber Tempe crash, and Tesla's presentation didn't address the long-standing concerns about its Level 5 ambitions, its minimal sensor suite, and the short timeline that leaves little room for thorough validation. Given that today's presentation failed to inspire the kind of run-up in Tesla's stock price that has come out of past Tesla hype-fests, it seems that the lack of answers to these pre-existing questions and concerns means that Tesla is stuck preaching to its choir.

Musk has certainly pulled rabbits from a hat before, and a number of the people he has working on Full Self-Driving are well-respected, so it's not easy to simply dismiss Tesla's autonomy ambitions as pure fantasy. On the other hand, it's still not clear how Tesla will develop a more capable system using worse hardware in less time and at less cost than the competition, and extraordinary claims require extraordinary proof. As fascinating as what we saw today was, it fell well short of being the extraordinary proof Tesla needed. Tesla's autonomous drive technology leadership still requires an awful lot of faith.